Introducing MetaFragments, a common format for timed metadata in HTML

MetaFragments gives web tools, mobile apps, browsers and search engines a simple way to explore, connect and share what is inside web videos.

Even when freed from Flash, web videos are data dead ends. Metadata for video or audio rarely exists in an exposed, usable form.

When it does exist, it is locked away in separate silos: external files in formats browsers cannot read, APIs, JavaScript code…

This leads to poor findability within a website and poor search engine optimization. It makes it hard to connect similar stories, to cite a specific sequence in a longer video, or simply to link back to a precise phrase in a video.

A standard way to expose timed metadata in web pages can benefit a wide range of web creators and users.

Video bloggers, mega-platforms like YouTube, and news organizations betting on web video as a rising medium will all get better findability and engagement.

Viewers will get new ways to find, share and connect to videos across the web.

How it works

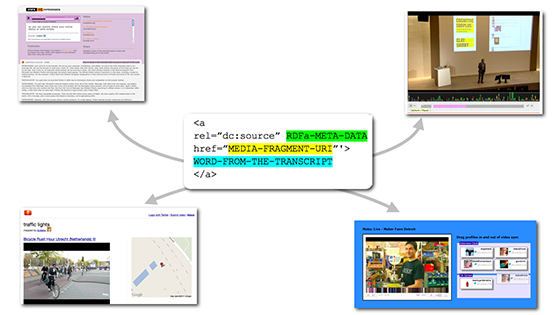

The idea behind MetaFragments is to use Media Fragments URIs and in-HTML metadata to store timed data about audio and video sequences.
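The W3C Media Fragments URI 1.0 specification expresses a time range as a `t=` dimension in the URI fragment, for example `video.webm#t=10,20` for seconds 10 to 20. As a minimal sketch of how a client might read that range, the Python below handles only the default NPT time format (plain seconds, `mm:ss` or `hh:mm:ss`, optionally prefixed by `npt:`); SMPTE and wall-clock times are out of scope, and the function names are illustrative, not part of any MetaFragments API.

```python
from typing import Optional, Tuple


def parse_npt(value: str) -> float:
    """Convert an NPT time such as "83.5", "1:23.5" or "0:01:23.5" to seconds."""
    parts = value.split(":")
    if len(parts) > 3 or any(part == "" for part in parts):
        raise ValueError(f"not an NPT time: {value!r}")
    seconds = 0.0
    for part in parts:
        # Each colon-separated field is worth 60 times the next one (hh, mm, ss).
        seconds = seconds * 60 + float(part)
    return seconds


def parse_temporal_fragment(fragment: str) -> Optional[Tuple[float, Optional[float]]]:
    """Return (start, end) in seconds for a fragment like "t=10,20".

    Only the NPT format is handled. A missing start means 0; a missing end
    (e.g. "t=10") means "until the end of the media" and is returned as None.
    Returns None when the fragment has no "t" dimension.
    """
    # Fragment dimensions are separated by "&", e.g. "t=10,20&xywh=0,0,320,240".
    for pair in fragment.lstrip("#").split("&"):
        name, _, value = pair.partition("=")
        if name != "t":
            continue
        if value.startswith("npt:"):
            value = value[len("npt:"):]
        start_text, _, end_text = value.partition(",")
        start = parse_npt(start_text) if start_text else 0.0
        end = parse_npt(end_text) if end_text else None
        return start, end
    return None
```

For instance, `parse_temporal_fragment("t=npt:0:01:23.5")` yields `(83.5, None)`: the range starts at 83.5 seconds and runs to the end of the media.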

Everything happens in the DOM, so web clients, crawlers and JavaScript libraries can easily find and use this data without prior knowledge of the website or its API. They just need to know existing standards.
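Because the data lives in the DOM, a crawler needs nothing beyond an ordinary HTML parser. The sketch below uses Python's standard `html.parser` and assumes, hypothetically, that timed items are `<a>` elements whose `href` carries a `t=` media fragment; the actual MetaFragments markup may add attributes or RDFa that this sketch ignores.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class TimedLinkCollector(HTMLParser):
    """Collect (href, text) for every <a> whose href has a "t=" media fragment."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None  # href of the timed <a> being read
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        fragment = href.partition("#")[2]
        # Keep only links whose fragment has a temporal ("t=") dimension.
        if any(part.startswith("t=") for part in fragment.split("&")):
            self._href = href
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def collect_timed_links(html: str) -> List[Tuple[str, str]]:
    """Return every timed link in an HTML document, in document order."""
    collector = TimedLinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links
```

Ordinary links such as `<a href="/about">` are skipped, so the crawler needs no site-specific rules to tell timed data apart from navigation.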

MetaFragments is only one week old, so it is obviously a work in progress. We encourage you to read more about its proposed specification here: MetaFragments Draft.

The great news is that we are already rallying support from Knight Mozilla Lab participants.

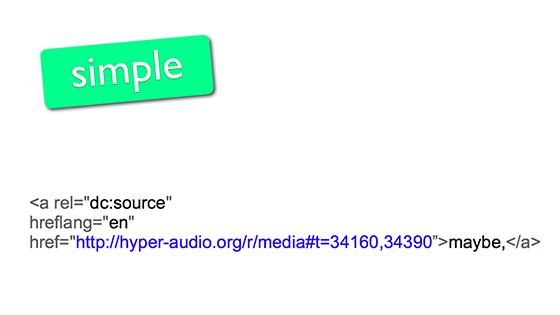

Mark Boas's Hyperaudio Pad tool has adopted MetaFragments in its basic form, without RDFa, as its working format.

Samuel Huron’s data visualization video player and Nicholas Doiron’s FollowFrost linked video interview player are the next candidates. If MetaFragments works well with their data-enriched viewers, it will give them a free HTML API for search engine crawlers and other apps, and it could help make their apps work together. I’m working on mapping their respective (and different!) internal JSON formats to MetaFragments, and we will iterate from there.

Shaminder Dulai, with an ambitious workflow project for tagging videos in the newsroom, is also bringing feedback from the point of view of a media producer and non-coder.

Here is the HTML code for the common use case of a transcript timed word by word:

In addition to being simple, MetaFragments is built on existing web standards. We are designing simple, common patterns that apply those standards, which can be hard to grasp, to real-world data problems:

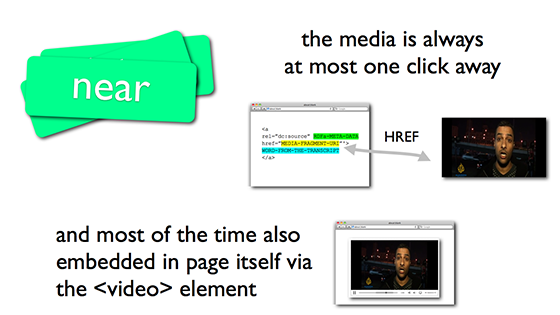

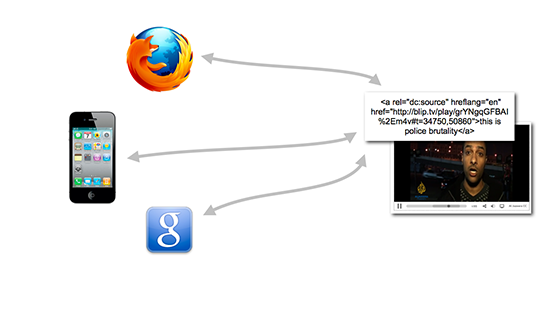

Finally, it is designed so that the metadata is always at most one click away from the resource it describes, for humans and apps alike.
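For an app, "one click away" can mean that following a timed link yields both the resource being described and the exact range within it. A minimal sketch using the standard `urllib.parse.urldefrag`; `TimedReference` and `follow_timed_link` are hypothetical names, and only plain-seconds `t=` values are handled.

```python
from typing import NamedTuple, Optional
from urllib.parse import urldefrag


class TimedReference(NamedTuple):
    resource: str          # URL of the media being described, fragment removed
    start: float           # range start in seconds
    end: Optional[float]   # range end in seconds, None if open-ended


def follow_timed_link(href: str) -> Optional[TimedReference]:
    """Split a timed link into its media resource and time range.

    Simplification: only plain-seconds values such as "t=12.5,13.1" are parsed.
    """
    resource, fragment = urldefrag(href)
    for pair in fragment.split("&"):
        name, _, value = pair.partition("=")
        if name != "t":
            continue
        if value.startswith("npt:"):
            value = value[len("npt:"):]
        start_text, _, end_text = value.partition(",")
        start = float(start_text) if start_text else 0.0
        end = float(end_text) if end_text else None
        return TimedReference(resource, start, end)
    return None
```

The same href therefore serves a human (the browser seeks to the range) and an app (which gets the resource URL and the timings as data).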

I publish video, what do I gain?

Are you a video or audio creator? Or maybe you own a big video website?

When you implement MetaFragments, your HTML pages effectively become the data API of your video site.

What does that mean? It means that any web client (a browser, but also an iPhone or Android app, or the crawlers from Google or Yahoo) can make sense of the data in your video without prior knowledge of your site.

This allows your viewers to spread your video stories further, automatically accompanying the usual link with citations, names and other information they would previously have had to type manually.
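Here is how a sharing tool might build such a citation automatically from a word-timed transcript: the quoted words plus a link to exactly that range. The `(text, start, end)` transcript shape, the `cite` function and the output format are assumptions for this sketch.

```python
from typing import List, Tuple

Word = Tuple[str, float, float]  # (text, start seconds, end seconds)


def _fmt(seconds: float) -> str:
    """Seconds with at most millisecond precision, trailing zeros dropped."""
    return f"{seconds:.3f}".rstrip("0").rstrip(".")


def cite(media_url: str, words: List[Word], first: int, last: int) -> str:
    """Quote words[first..last] (inclusive) followed by a link to that exact range."""
    if not 0 <= first <= last < len(words):
        raise IndexError("citation range is outside the transcript")
    selected = words[first:last + 1]
    quote = " ".join(text for text, _, _ in selected)
    # The range runs from the first quoted word's start to the last one's end.
    start, end = selected[0][1], selected[-1][2]
    return f'"{quote}" {media_url}#t={_fmt(start)},{_fmt(end)}'
```

With `words = [("We", 0.0, 0.2), ("choose", 0.2, 0.6), ("to", 0.6, 0.7), ("go", 0.7, 1.0)]`, `cite("talk.webm", words, 1, 3)` returns `"choose to go" talk.webm#t=0.2,1`.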

You can also use MetaFragments to index your own videos, even across several platforms and websites. This lets you, or your web community, cross-reference them and find hidden stories in your own footage.
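Indexing across platforms can start as an inverted index from each spoken word to the places where it occurs. In this sketch the input, `(media URL, word, start)` records harvested from MetaFragments pages, is a hypothetical shape; a production index would also need stemming, stop words and persistence. Results are open-ended fragments (`url#t=start`), which play from that point to the end.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# (media URL, spoken word, start in seconds): a hypothetical harvested record.
TimedWord = Tuple[str, str, float]


def _normalize(word: str) -> str:
    """Lower-case a word and strip surrounding punctuation."""
    return word.lower().strip(".,;:!?\"'")


def _fmt(seconds: float) -> str:
    """Seconds with at most millisecond precision, trailing zeros dropped."""
    return f"{seconds:.3f}".rstrip("0").rstrip(".")


def build_index(records: Iterable[TimedWord]) -> Dict[str, List[Tuple[str, float]]]:
    """Map each normalized word to every (media URL, start) where it is spoken."""
    index: Dict[str, List[Tuple[str, float]]] = defaultdict(list)
    for media_url, word, start in records:
        key = _normalize(word)
        if key:
            index[key].append((media_url, start))
    return dict(index)


def search(index: Dict[str, List[Tuple[str, float]]], word: str) -> List[str]:
    """Return open-ended timed links ("url#t=start") for every occurrence of word."""
    return [f"{url}#t={_fmt(start)}" for url, start in index.get(_normalize(word), [])]
```

Because results are plain URLs, footage hosted on different sites ends up in one list of playable links.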

Who will benefit from it?

Journalists, both professional and citizen, can easily reference and find specific sequences in audio and video media, assembling stories with simpler online tools.

Media publishers get a standard way to make their video and audio content visible in the HTML, driving traffic and engagement.

Developers get a straightforward, cross-browser method of exposing media metadata and the ability to link, assemble and integrate media fragments into their applications.

Artists can find inspiring video and audio recordings on the web and integrate specific Creative Commons sequences into their works, both manually and in new automated ways that are not possible today.

Scientists and academics can analyze media, find correlations and reference sequences more easily, with word-level precision, when a MetaFragments transcript is present.
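With word-level timing, locating a phrase reduces to matching consecutive words and reading off the first word's start and the last word's end. A minimal sketch, assuming a transcript already parsed into `(text, start, end)` triples; case and surrounding punctuation are ignored, and `find_phrase` is an illustrative name.

```python
from typing import List, Tuple

Word = Tuple[str, float, float]  # (text, start seconds, end seconds)


def _normalize(text: str) -> str:
    """Lower-case a token and strip surrounding punctuation."""
    return text.lower().strip(".,;:!?\"'")


def find_phrase(words: List[Word], phrase: str) -> List[Tuple[float, float]]:
    """Return the (start, end) range, in seconds, of every occurrence of phrase."""
    target = [_normalize(token) for token in phrase.split()]
    spoken = [_normalize(text) for text, _, _ in words]
    n = len(target)
    if n == 0:
        return []
    hits = []
    # Slide a window of n words over the transcript and compare token by token.
    for i in range(len(spoken) - n + 1):
        if spoken[i:i + n] == target:
            hits.append((words[i][1], words[i + n - 1][2]))
    return hits
```

Each returned range maps directly onto a `#t=start,end` fragment, so every hit can be cited or replayed precisely.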

What’s next and what do we need?

MetaFragments is at an early draft stage. We need to refine it, for example by adding simple, clean and useful RDFa patterns.

The right way to refine this standard is to keep involving the community and to put it to the test in real-world applications: Popcorn.js web apps, video platforms, news websites and CMS plugins.

Working on MetaFragments also revealed the lack of easily usable vocabularies for timed and non-verbal information in videos. Where they don't exist yet, we need to specify simple, quick-to-implement vocabularies for emotions and gestures.

In terms of adoption into CMSs and frameworks, we need to foster the development of a WordPress plugin, a Drupal module and a Popcorn.js plugin.

With the support of the open source community, video platforms and video website owners, MetaFragments can leverage the millions of video and audio reports by citizens, bloggers and journalists and turn them into data-rich stories.

Update: I added the MetaFragments equivalent of the YouTube subtitles to this post. That means a machine-readable version of the subtitles is available directly in the page. You can take a look at them by viewing the source of this page.